Activant's Greene Street Observer


Voice Agents 2.0

From “Sounds Human” to “Thinks Human”

When we first published “Conversational AI and the Future of Customer Service” in early 2024, our market thesis centered on realism: could generative AI replicate natural, multi-turn dialogue well enough to move beyond clumsy Interactive Voice Response (IVR) systems and high-turnover human call centers? At the time, we focused on how AI would disrupt the $314 billion call center market by achieving human parity in sound and conversational flow. Almost two years later, that framing feels outdated. Infrastructure improvements have pushed response latencies below 300 milliseconds, bringing AI response times in line with the pace of natural human conversation. The bar for realism has risen significantly, and what once seemed ambitious is now table stakes.

What began as a customer service tool is developing into a cross-industry infrastructure shift. Industries including healthcare, finance, retail, gaming, translation, education, and automotive are integrating voice capabilities. Even Wimbledon has adopted electronic line-calling technology that uses automated voice calls of “out” and “fault” on its courts. However, a critical tension has surfaced: while consumer-facing AI applications proliferate, enterprise adoption for core processes remains measured and cautious. How this gap is resolved will define the next phase, as the competitive focus shifts from “sounds human” to “thinks human.”

Differentiation no longer comes from voice quality alone; it comes from memory, context-awareness, and verifiable governance. In this report, we revisit our initial assumptions and explore where true, defensible value is accruing.

A note on terminology: These systems are often called “chatbots,” but that term fails to capture the complexity of AI integrated into enterprise workflows. We use “voice agents” to describe AI systems that handle complex tasks, retain memory, and take actions.

Revisiting Our 2024 Thesis: Hype vs. Enterprise Reality

Our initial analysis predicted rapid consolidation and easier adoption. The market has proved more nuanced. Enterprise buyers, wary of brand risk and integration complexity, have prioritized control over speed, reshaping both go-to-market strategies and technology moats.

Thesis Revisited: Adoption Pacing and the Wedge Strategy

Initial Expectation: Full automation would rapidly displace human agents in customer-facing roles.

Market Reality: Augmentation has taken precedence over full automation, as the vision of fully autonomous agents collided with the high stakes of brand reputation. McDonald’s, for example, faced a public relations headache when viral videos showed its automated drive-thru ordering system getting orders wrong.

Enterprises have learned to separate ambition from deployment reality. A 2024 Gartner survey revealed significant consumer apprehension, with many respondents fearing AI would make it harder to reach a human. Consequently, leaders are implementing Voice AI through a wedge strategy: targeting high-volume, lower-risk use cases first. These typically include after-hours support, overflow call routing, and internal agent assistance (e.g., summaries and recommendations). The strategy works because it delivers immediate ROI without jeopardizing core operations.

For instance, startups like Toma target automotive dealerships, where 56% of leads arrive after hours. By capturing and qualifying these leads instead of letting them go to voicemail, the voice agent demonstrates clear value and builds internal trust for future expansion. Because most enterprise adoption paths are multimodal, the wedge strategy provides an entry point that expands into omnichannel automation and ultimately positions the voice agent as the system of record.
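To make the wedge concrete, here is a minimal sketch of the kind of routing rule a first deployment might start with. It assumes a hypothetical contact center that hands a call to the voice agent only after hours or when no human agent is free and the queue is long; every other call stays with humans. The staffed hours, the queue threshold, and all names (`Route`, `ContactCenterState`, `route_call`) are illustrative assumptions, not any vendor's API.

```python
from dataclasses import dataclass
from datetime import datetime, time
from enum import Enum


class Route(Enum):
    HUMAN = "human"              # default: core traffic stays with people
    VOICE_AGENT = "voice_agent"  # wedge: after-hours and overflow traffic only


@dataclass
class ContactCenterState:
    # Hypothetical snapshot of capacity at the moment a call arrives.
    available_humans: int
    queue_length: int


# Illustrative assumptions: staffed 9:00-18:00 on weekdays, and overflow
# starts once more than 5 callers are waiting with no free human agent.
OPEN = time(9, 0)
CLOSE = time(18, 0)
OVERFLOW_QUEUE_THRESHOLD = 5


def is_after_hours(ts: datetime) -> bool:
    """True on weekends (weekday() 5 or 6) or outside staffed weekday hours."""
    if ts.weekday() >= 5:
        return True
    return not (OPEN <= ts.time() < CLOSE)


def route_call(ts: datetime, state: ContactCenterState) -> Route:
    """Send only high-volume, lower-risk traffic to the voice agent."""
    if is_after_hours(ts):
        # These calls would otherwise go to voicemail.
        return Route.VOICE_AGENT
    if state.available_humans == 0 and state.queue_length > OVERFLOW_QUEUE_THRESHOLD:
        # Overflow: every human is busy and the queue is long.
        return Route.VOICE_AGENT
    return Route.HUMAN


if __name__ == "__main__":
    # Saturday evening: after hours, so the voice agent answers.
    print(route_call(datetime(2025, 7, 5, 20, 0), ContactCenterState(3, 0)).value)
    # Wednesday morning with free humans: the call stays with a person.
    print(route_call(datetime(2025, 7, 2, 10, 0), ContactCenterState(2, 0)).value)
```

Keeping `Route.HUMAN` as the fallback mirrors the augmentation-first posture described above: expanding the agent's scope later means loosening these conditions rather than redesigning the routing.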

Yet despite these gains, board-level concerns persist. In July 2025, the World Economic Forum reported that as AI becomes more agentic in enterprise operations, issues of trust, explainability, data control, and regulatory compliance continue to delay adoption.

Thesis Revisited: Market Consolidation and Switching Costs

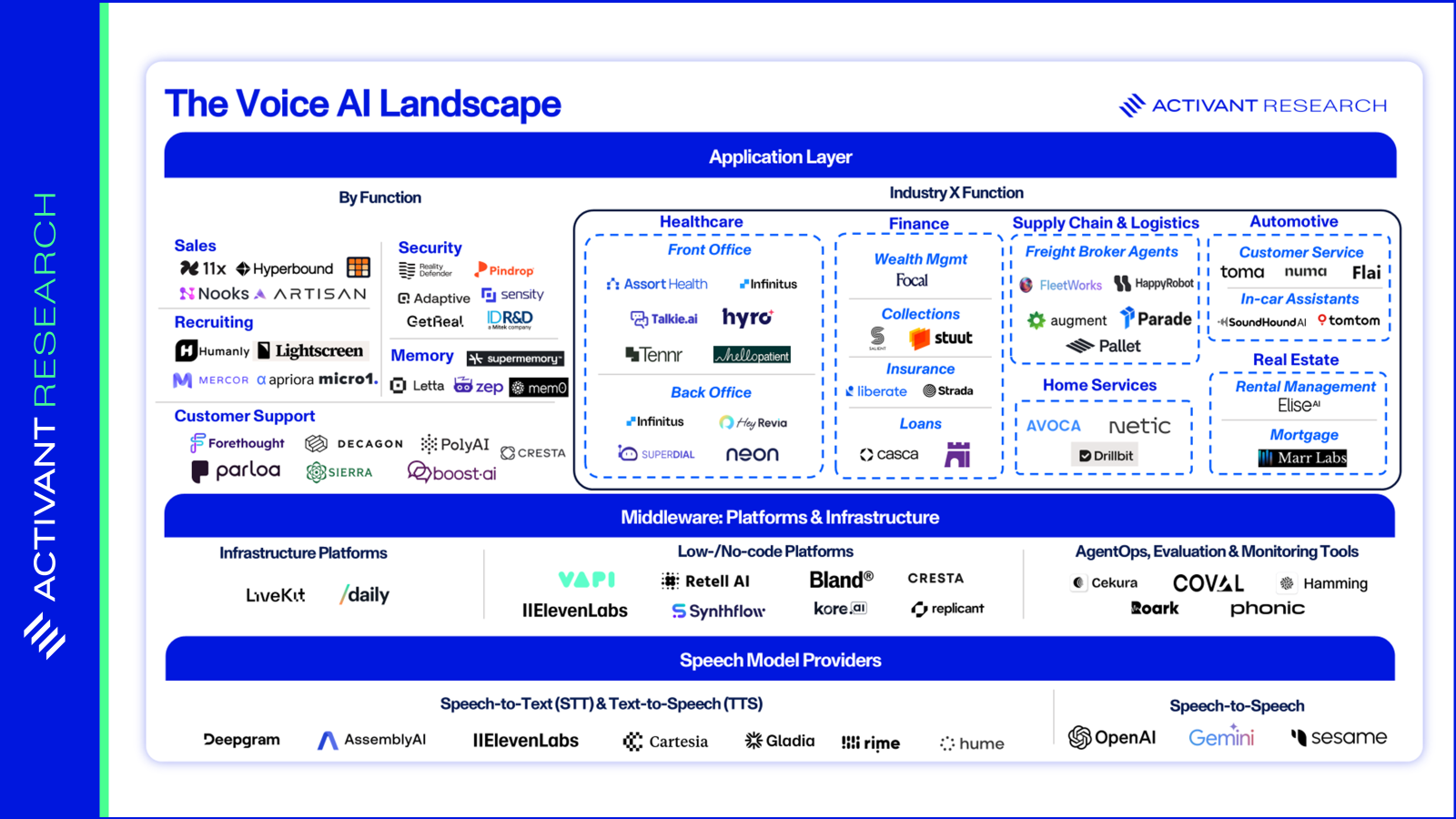

Initial Expectation: The market would consolidate rapidly, with category leaders emerging and as many as half the players on our initial market map disappearing within a year.

Market Reality: Consolidation has been slower than anticipated. According to CB Insights, most Voice AI companies are still iterating on product rather than achieving true scale. The field remains fragmented, buoyed by broad category definitions and steady VC funding: Voice AI companies raised $371M in equity funding in the first seven months of 2025, matching their total for all of 2024.

The primary barrier to consolidation is not a lack of M&A interest but high switching costs. Our initial thesis assumed that stack modularity would simplify migration. However, enterprise adoption continues to move at the speed of IT, not the speed of innovation. Activant’s expert network notes that while an SMB might switch vendors in weeks, large enterprise migrations take eight to twelve months. In regulated sectors like healthcare, compliance verification can add another two to four months, pushing total timelines to roughly ten to sixteen months.

These barriers are less contractual than technical and operational. Systems are deeply coupled to provider-specific IDs and workflow logic, so re-implementation means retraining staff and sacrificing the operational knowledge built around a specific vendor’s quirks. As one product head noted in an expert call, "The longer you stay with one vendor, the more cost-effective it becomes to continue."

The bottom line: enterprises are moving cautiously, and that caution is redefining defensibility and pointing toward the next phase of value creation in Voice AI.