Activant's Greene Street Observer

Open Source Generative AI

From OpenAI to open source AI: how it unbundles the AI stack

Will the best Generative AI models be open or closed source 10 years from now? We believe that is the wrong question. The better question is how to build the best AI system, and which models (plural) it should use.

In today’s AI paradigm, closed source models built by research labs with $10bn+ budgets dominate benchmarks and leaderboards. However, open source models are rarely far behind, typically matching performance within 6 months. This performance parity matters because history shows that demand for lower cost, higher performance, and customizability pushes the balance toward open source, but only if open models can keep up.

With techniques like fine-tuning and quantization, open source models can outperform massive closed source models on cost, latency, and task-specific performance, all while supporting data security and regulatory compliance. Developers want to work with open source, and they don’t need to compromise. Combining multiple fine-tuned, open source models into compound AI systems can beat monolithic closed source models on every dimension.
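To make the cost argument for quantization more concrete, here is a minimal Python sketch. It assumes a hypothetical 7-billion-parameter model and a simple per-tensor symmetric int8 scheme; production toolkits use more elaborate methods, and every name below is illustrative rather than any library's API. It estimates how much memory the weights need at different precisions and shows that int8-quantized weights round-trip with small error.

```python
# Hypothetical illustration (not any specific library's method):
# weight memory at different precisions, plus a minimal per-tensor
# symmetric int8 quantizer.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory for the weights alone (ignores activations and KV cache)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integer codes in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each weight to the nearest code and clamp to the int8 range.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    n = 7e9  # hypothetical 7B-parameter model
    for p in ("fp16", "int8", "int4"):
        print(f"{p}: {weight_memory_gb(n, p):.1f} GB")

    w = [0.12, -0.5, 0.031, 0.9, -0.77]  # toy weights
    codes, scale = quantize_int8(w)
    approx = dequantize_int8(codes, scale)
    print(codes)
    # Worst-case round-trip error is at most half a quantization step.
    print(max(abs(a - b) for a, b in zip(w, approx)))
```

Halving the bytes per parameter roughly halves the memory needed to hold the weights, which is one reason a quantized open model can run on cheaper hardware. Activation memory and the KV cache add overhead that this sketch does not model.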

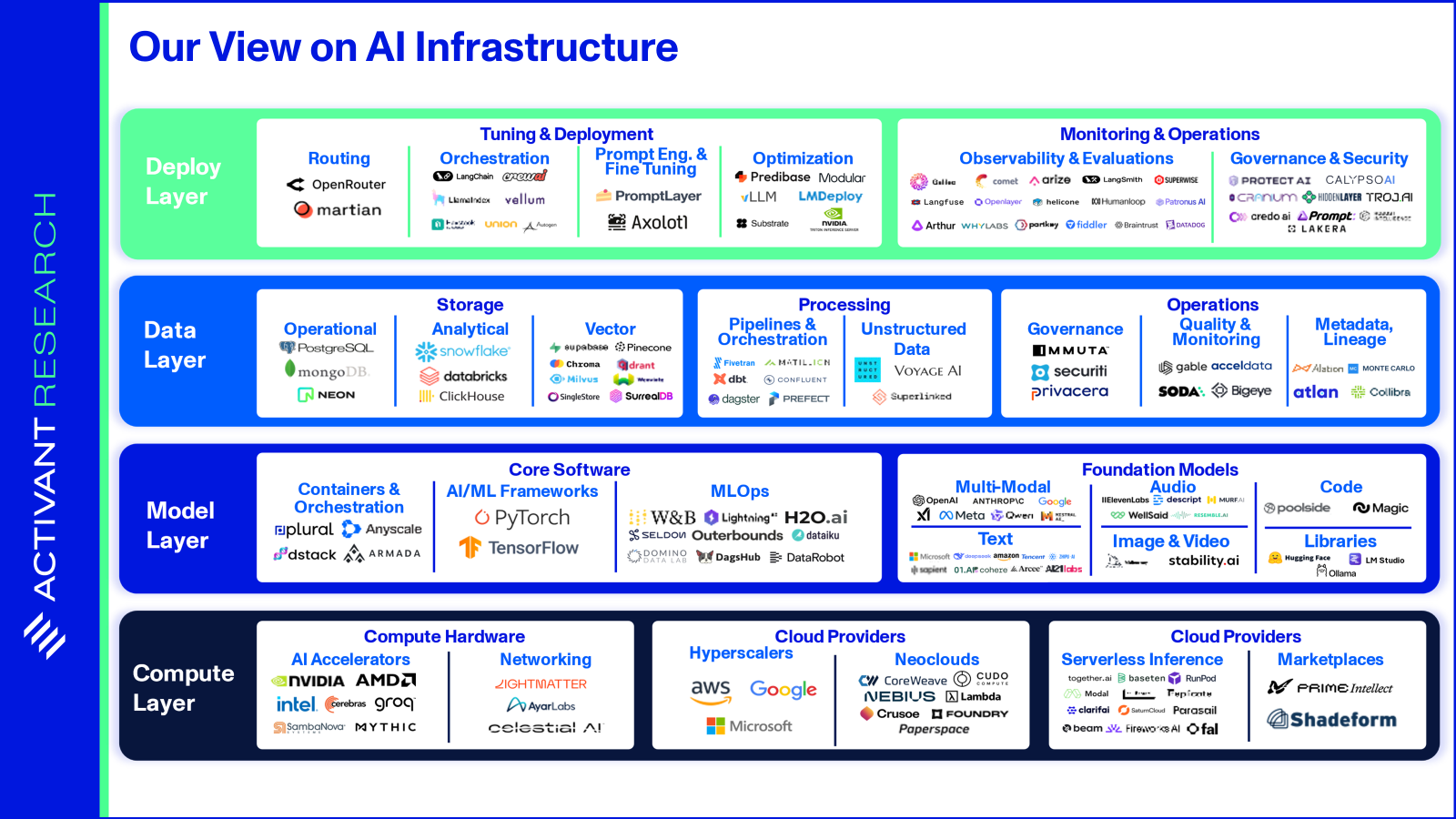

We believe this suggests that an increasing share of the Generative AI market will be captured not by massive models, but by the infrastructure layer that makes these systems possible.

The Status Quo: Dominance of Closed Source Models

When Generative AI is discussed, the names that likely come to mind are OpenAI (which kicked off the Generative AI revolution with ChatGPT), Anthropic’s Claude, and Google’s Gemini. These companies secured their leadership in societal mindshare by pairing state-of-the-art model research with a consumer interface, the chatbot, to which we have all become accustomed. The chat interface turned out to be the perfect way to get this highly sophisticated technology into the hands of end users, including those with limited technical skills. Today, ChatGPT reports ~500 million weekly active users, and OpenAI has crossed $10bn in run-rate revenues. By embedding Generative AI into search, Google’s AI Overviews now reach 1.5bn users.

However, good UX and product engineering alone are not what earned these companies their position. In fact, Google is arguably weak on product and relies heavily on its technical strength. AI users are fickle, and these companies’ leadership is backed by leaderboard dominance. Consider LMArena, a leaderboard often referred to as the “industry’s obsession” and even referenced at official Google conferences. Since its inception, the top spots have been almost entirely held by Google, Anthropic, and OpenAI.

Not only do these providers offer state-of-the-art models, but they also deliver fully integrated stacks: they deploy the underlying GPUs/TPUs, optimize the infrastructure stack, and wrap it all up so that developers can add AI to their products with a simple API call. Unsurprisingly, this translates into leadership in API inference: OpenAI clearly leads developer mindshare, with 63% of developers using its API.

Much has been made of open source AI, but one thing is overwhelmingly clear today: the dominant providers are all closed source. Training state-of-the-art (SOTA) Generative AI models is expensive. Training GPT-4, a model now far removed from SOTA, reportedly cost OpenAI ~$100 million. Top labs are building clusters of 100,000 GPUs, with reported capital costs of $4 billion. And infrastructure accounts for only 50% to 70% of known costs, with staff making up the rest. Staff costs are also intensifying, with some AI researchers now being offered pay packages worth “nine figures”. Scale is an extreme advantage: modern AI is not a world where a few principled developers collaborating in forums can build a competing product, and closed source providers look well positioned to continue dominating.

However, the dominance of a closed source provider in an infrastructure-level technology has little historical precedent. Developers value control, customizability, and the ability to avoid vendor lock-in, all advantages that open ecosystems can provide. In machine learning, open source frameworks like TensorFlow and PyTorch dominate; in databases, Postgres and MySQL hold the lead. Open source frequently wins at the infrastructure level.

The real question is whether this pattern will carry over to the market for foundation models. To find out, we look to past technological shifts for clues about how the battle between open and closed models may play out.